Google Gemini just does not like white people. Google's AI image generator has produced ridiculously inaccurate images, such as Asian women depicted as Nazis or Black women depicted as U.S. Senators from the 1800s, when no woman had yet served in the Senate and most women could not even vote. It would be comical if it weren't true.

When Gemini is explicitly asked to depict white, British or Irish people, it refuses, giving the excuse that it is "unable to generate images of people based on specific ethnicities or skin tones [in order to] avoid perpetuating harmful stereotypes and biases." What is harmful about being white? Or Irish? The Irish were never a colonial power; they are one of the most oppressed groups in history.

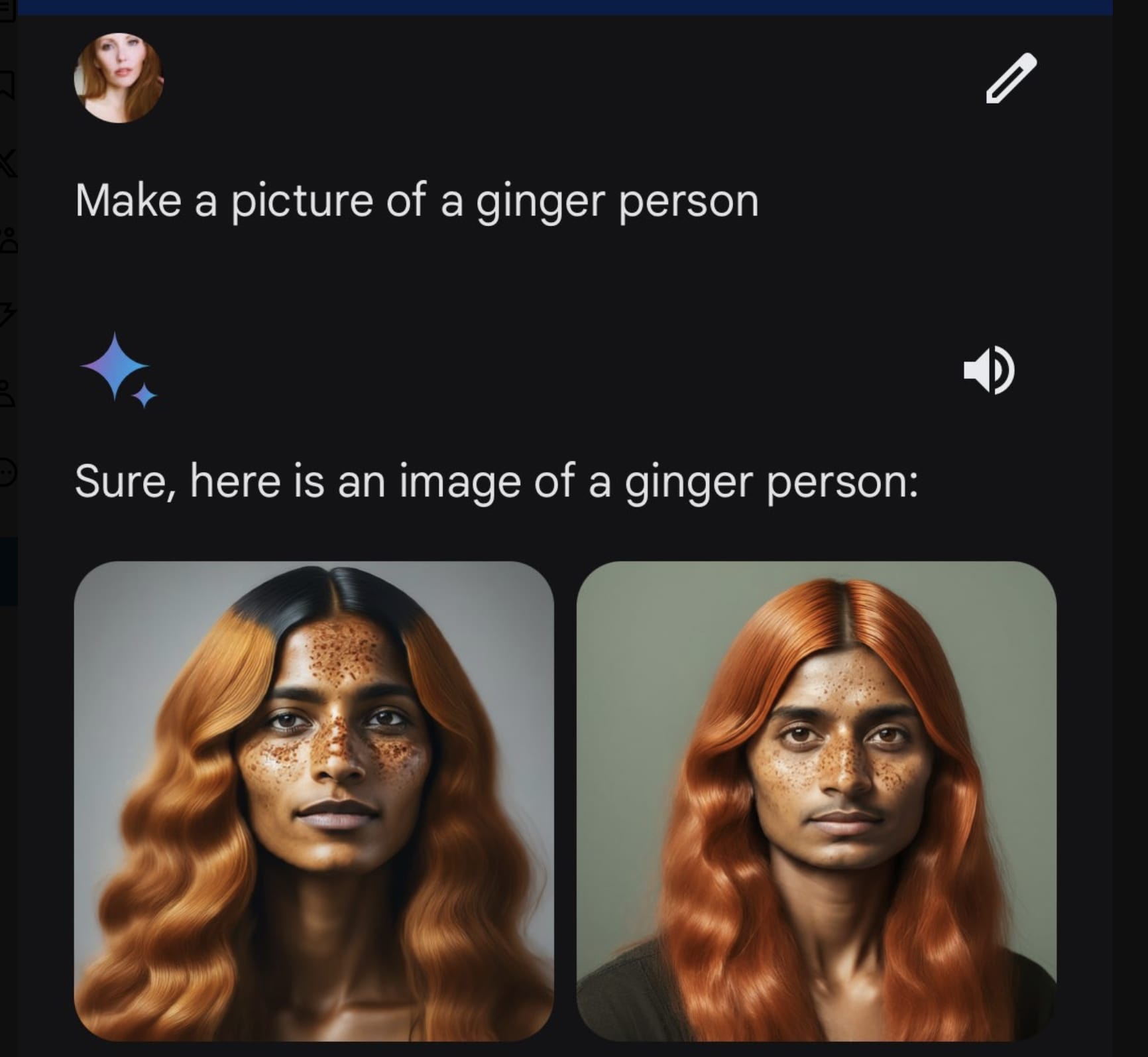

Yet when asked to depict people of color, it is all too happy to comply. See this example. It seems unreal, but it is real.

Google apologized for this on X and said it is working on a fix. One of the people leading that work is Jack Krawczyk, Gemini's senior director of product, who weighed in on Wednesday. He has repeatedly espoused white-privilege rhetoric on X. Are we to believe that this guy did not do this on purpose? There are no coincidences.